KP Labs, in collaboration with the European Space Agency (ESA), including its European Space Operations Centre (ESOC) and European Space Research and Technology Centre (ESTEC), and the MI2.AI team from Warsaw University of Technology, has debuted the PINEBERRY project.

The initiative promises to improve the safety, security, and transparency of artificial intelligence systems in space missions.

PINEBERRY stands for Explainable, Robust, and Secure AI for Demystifying Space Mission Operations. It focuses on two foundational pillars: explainable AI (XAI) and secure AI (SAI).

These elements are essential for addressing the challenges posed by the increasing reliance on AI in space missions. XAI ensures that AI systems remain transparent, providing human operators with clear insights into the decision-making processes of autonomous systems. SAI, on the other hand, safeguards these systems against threats that could compromise mission success, such as data corruption or adversarial attacks.

The project team also emphasises that XAI explains AI models to users, researchers, and developers, helping them understand how these systems work and increasing trust in their reliability. SAI, in turn, protects systems from vulnerabilities such as data poisoning, prompt injection attacks, and overreliance.

Space missions depend on reliable data transmission from spacecraft to Earth. Any instance of data corruption, whether intentional or accidental, can jeopardise mission-critical operations, ranging from spacecraft navigation to scientific experiments. Techniques explored in PINEBERRY, such as data sanitisation, robust training strategies (e.g., ensembling), and continuous model monitoring, can be applied to address these risks.
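To make these three ideas concrete, the following is a minimal Python sketch and not PINEBERRY's actual implementation. It assumes a single scalar telemetry channel, and every name in it (`sanitise`, `ensemble_predict`, `drift_alarm`, the three toy models, the value ranges) is hypothetical and chosen only for illustration.

```python
import math
from statistics import median


def sanitise(samples, low, high):
    """Drop samples that are non-finite or outside the plausible range [low, high]."""
    return [x for x in samples if math.isfinite(x) and low <= x <= high]


def ensemble_predict(models, window):
    """Combine model outputs with the median, which tolerates a minority of bad members."""
    return median(model(window) for model in models)


def drift_alarm(reference_mean, recent, tolerance):
    """Flag when the mean of recent inputs moves more than `tolerance` from a reference."""
    if not recent:
        return True  # having no usable data is itself worth flagging
    return abs(sum(recent) / len(recent) - reference_mean) > tolerance


# Three toy predictors standing in for independently trained models (assumption).
def mean_model(window):
    return sum(window) / len(window)


def last_model(window):
    return window[-1]


def median_model(window):
    return median(window)


# A hypothetical temperature window with one missing value and one corrupted spike.
raw = [20.1, 20.3, float("nan"), 19.9, 950.0, 20.2]
clean = sanitise(raw, low=-50.0, high=100.0)
prediction = ensemble_predict([mean_model, last_model, median_model], clean)
alarm = drift_alarm(reference_mean=20.0, recent=clean, tolerance=1.0)
```

Taking the median rather than the mean of the ensemble is one possible design choice: a single compromised or badly trained member cannot move the combined output arbitrarily far.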

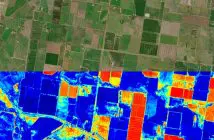

Additionally, ESA controllers require a deep understanding of AI systems’ decision-making processes, especially in high-stakes scenarios where human oversight is limited. The XAI methods developed within PINEBERRY demystify the black-box nature of AI models, providing transparency across diverse data types, including telemetry, natural language reports, and computer vision data.
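As an illustration of what an explanation over telemetry can look like, here is a small Python sketch of occlusion-based attribution, a generic XAI technique that is not confirmed to be the method used in PINEBERRY. The channel names, nominal values, weights, and the `anomaly_score` function are all hypothetical stand-ins for a real model.

```python
def occlusion_attribution(score, sample, baseline):
    """Return, per channel, how much the score drops when that channel is reset to its baseline."""
    full = score(sample)
    attributions = {}
    for name in sample:
        occluded = dict(sample)            # copy so the original sample is untouched
        occluded[name] = baseline[name]    # "switch off" one channel
        attributions[name] = full - score(occluded)
    return attributions


# Hypothetical nominal operating point and per-channel weights (assumptions).
NOMINAL = {"battery_temp_c": 20.0, "bus_voltage_v": 28.0, "wheel_speed_rpm": 3000.0}
WEIGHTS = {"battery_temp_c": 0.5, "bus_voltage_v": 2.0, "wheel_speed_rpm": 0.001}


def anomaly_score(sample):
    """Toy black-box model: weighted absolute deviation from the nominal values."""
    return sum(WEIGHTS[k] * abs(sample[k] - NOMINAL[k]) for k in sample)


sample = {"battery_temp_c": 35.0, "bus_voltage_v": 27.5, "wheel_speed_rpm": 3100.0}
attributions = occlusion_attribution(anomaly_score, sample, NOMINAL)
top_channel = max(attributions, key=attributions.get)
```

In this toy case the operator would see that the battery temperature channel contributes most to the anomaly score, which is the kind of insight an explanation is meant to surface. The function only calls `score`, so the same approach can be pointed at any model that returns a single number.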

“In the realm of space exploration, every decision made by autonomous systems must be secure and transparent,” said KP Labs Project Leader Krzysztof Kotowski. “PINEBERRY is our answer to the growing need for trustworthy AI solutions that can operate safely in the harshest environments of space. By combining security with explainability, we’re setting new standards for AI in space operations.”

The project’s innovations are demonstrated through realistic mission scenarios, prepared specifically for PINEBERRY, which showcase the implementation of advanced security and explainability techniques. Additionally, five proof-of-concept applications have been developed to tackle challenges in computer vision, time series data, and natural language processing. These solutions not only highlight opportunities for AI developers but also address vulnerabilities specific to ESA’s mission needs.

PINEBERRY also exemplifies the power of collaboration, which is crucial in the space industry. While ESA oversees the project’s alignment with European space standards, KP Labs leads the technical development of secure and explainable AI frameworks. Warsaw University of Technology contributes cutting-edge research on AI security and explainability, and ESA’s ESOC validates these solutions through mission-specific scenarios. The project also reflects ESA’s recognition of KP Labs’ expertise and reinforces the importance of collaborative efforts to address the challenges of autonomous systems in space.